Struct rustml::nn::NeuralNetwork

    pub struct NeuralNetwork {
        // some fields omitted
    }

A simple feed-forward neural network with an arbitrary number of layers and one bias unit in each hidden layer.

Neural networks are a powerful machine learning approach that can learn complex non-linear hypotheses, e.g. for regression or classification tasks.

Example

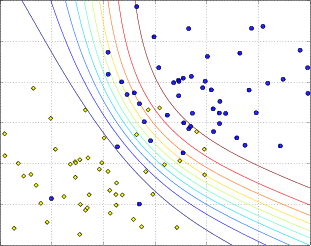

In the following example, a toy dataset is generated from two Gaussian sources. A neural network is then trained to build a hypothesis which separates the points of the two sources. The decision boundary of the hypothesis and the points of the toy dataset are shown in the plot above.

    #[macro_use] extern crate rustml;

    use std::iter::repeat;
    use rustml::nn::*;
    use rustml::*;
    use rustml::opt::empty_opts;

    // create a toy dataset
    let seed = [1, 2, 3, 4];
    let n = 50;
    let x = mixture_builder()
        .add(n, normal_builder(seed).add(0.0, 0.5).add(0.0, 0.5))
        .add(n, normal_builder(seed).add(1.0, 0.5).add(1.0, 0.5))
        .as_matrix()
        .rm_column(0);

    // create the labels
    let labels = Matrix::from_it(
        repeat(0.0).take(n).chain(repeat(1.0).take(n)), 1
    );

    let n = NeuralNetwork::new()
        .add_layer(2) // input layer with two units
        .add_layer(3) // hidden layer with three units
        .add_layer(1) // output layer
        .gd(&x, &labels, // use gradient descent to optimize the network
            empty_opts()
                .alpha(20.0) // learning rate is 20
                .iter(500)   // number of iterations is 500
        );

Methods

impl NeuralNetwork

fn new() -> NeuralNetwork

Creates a new neural network.

The network does not contain any layers. To add layers, use the method add_layer.

Example

    use rustml::nn::NeuralNetwork;

    // create a neural network ...
    let n = NeuralNetwork::new()
        .add_layer(3)   // ... with 3 units in the input layer
        .add_layer(10)  // ... 10 units in the hidden layer
        .add_layer(4);  // and 4 units in the output layer

fn add_layer(&self, n: usize) -> NeuralNetwork

Adds a layer to the network with the specified number of units.

The first layer that is added represents the input layer. Each layer added after it represents a hidden layer, except for the last layer added, which automatically becomes the output layer.

For each layer that is added (except the input layer) random parameters are generated (i.e. the weights of the connections between the units / neurons) which connect the previous layer with the new layer.

Panics if n == 0.

Example

    use rustml::nn::NeuralNetwork;

    // create a neural network ...
    let n = NeuralNetwork::new()
        .add_layer(3)   // ... with 3 units in the input layer
        .add_layer(10)  // ... 10 units in the hidden layer
        .add_layer(4);  // and 4 units in the output layer

fn set_params(&self, layer: usize, params: Matrix<f64>) -> NeuralNetwork

Sets the parameters (i.e. the weights) which connect the layer at depth n with the layer at depth n + 1, where n is the value of the layer argument.

The input layer has depth 0, the first hidden layer has depth 1 and so on.

Panics if the layer does not exist or if the dimensions of the new parameter matrix do not match the dimensions of the parameter matrix it replaces.

Example

    use rustml::*;
    use rustml::nn::NeuralNetwork;

    // create a neural network ...
    let n = NeuralNetwork::new()
        .add_layer(3) // ... with 3 units in the input layer
        .add_layer(2) // ... 2 units in the hidden layer
        .add_layer(1) // and 1 unit in the output layer
        .set_params(0, mat![
            // weights from the first, second and third unit of the first
            // layer (input layer) to the first unit in the second layer
            1.0, 0.9, 0.2;
            // weights from the first, second and third unit of the first
            // layer to the second unit in the second layer
            -0.3, 0.2, 0.5])
        .set_params(1, mat![
            // weights from the bias unit, the first and second units of the
            // second layer to the unit in the output layer
            0.1, -0.3, -0.1]);
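The dimension check described above depends on the shape each parameter matrix is expected to have. The following is a minimal sketch of that shape rule in plain Rust, without rustml. It assumes, based only on the examples in this page, that each matrix has one row per unit in the target layer and one column per unit in the source layer, plus one extra column for the bias unit when the source layer is a hidden layer. The function name param_shapes is hypothetical and not part of the library.

```rust
/// Returns the assumed (rows, columns) shape of each parameter matrix for a
/// network with the given layer sizes (input layer first).
///
/// Assumption inferred from the examples: hidden layers carry one bias unit,
/// the input layer does not.
fn param_shapes(layer_sizes: &[usize]) -> Vec<(usize, usize)> {
    layer_sizes
        .windows(2)
        .enumerate()
        .map(|(depth, pair)| {
            // Only hidden source layers (depth >= 1) contribute a bias column.
            let bias = if depth == 0 { 0 } else { 1 };
            (pair[1], pair[0] + bias)
        })
        .collect()
}
```

For the layer sizes 3, 2, 1 used in the example, this yields a 2 x 3 matrix for depth 0 and a 1 x 3 matrix for depth 1, which agrees with the matrices passed to set_params.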

fn input_size(&self) -> usize

Returns the number of input units.

Panics if no input layer exists.

Example

    use rustml::nn::NeuralNetwork;

    // create a neural network ...
    let n = NeuralNetwork::new()
        .add_layer(3)   // ... with 3 units in the input layer
        .add_layer(10)  // ... 10 units in the hidden layer
        .add_layer(4);  // and 4 units in the output layer

    assert_eq!(n.input_size(), 3);

fn output_size(&self) -> usize

Returns the number of output units.

Panics if no output layer exists.

Example

    use rustml::nn::NeuralNetwork;

    // create a neural network ...
    let n = NeuralNetwork::new()
        .add_layer(3)   // ... with 3 units in the input layer
        .add_layer(10)  // ... 10 units in the hidden layer
        .add_layer(4);  // and 4 units in the output layer

    assert_eq!(n.output_size(), 4);

fn layers(&self) -> usize

Returns the number of layers.

Example

    use rustml::nn::NeuralNetwork;

    // create a neural network ...
    let n = NeuralNetwork::new()
        .add_layer(3)   // ... with 3 units in the input layer
        .add_layer(10)  // ... 10 units in the hidden layer
        .add_layer(4);  // and 4 units in the output layer

    assert_eq!(n.layers(), 3);

fn predict(&self, input: &Matrix<f64>) -> Matrix<f64>

Computes the output of the neural network for the given inputs.

Each row in the input matrix represents an observation for which the neural network computes the output value.

The implementation uses matrix multiplications that are optimized via BLAS.

Example

    use rustml::*;
    use rustml::nn::NeuralNetwork;

    // parameters from the input layer to the first hidden layer
    let p1 = mat![
        0.1, 0.2, 0.4;
        0.2, 0.1, 2.0
    ];

    // parameters from the hidden layer (+ bias unit) to the output layer
    let p2 = mat![
        0.8, 1.2, 0.6;
        0.4, 0.5, 0.8;
        1.4, 1.5, 2.0
    ];

    let n = NeuralNetwork::new()
        .add_layer(3) // 3 units in the input layer
        .add_layer(2) // 2 units in the hidden layer (without bias)
        .add_layer(3) // 3 units in the output layer
        .set_params(0, p1)
        .set_params(1, p2);

    // observations
    let x = mat![
        0.5, 1.2, 1.5; // features of the first observation
        0.3, 1.1, 1.0; // features of the second observation
        0.7, 0.9, 1.8  // features of the third observation
    ];

    // expected target values
    let t = mat![
        0.90270, 0.82108, 0.98771; // output for the first observation
        0.89349, 0.80946, 0.98494; // output for the second observation
        0.90529, 0.82427, 0.98840  // output for the third observation
    ];

    assert!(n.predict(&x).similar(&t, 0.00001));
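To make the computation behind predict easier to follow, here is a minimal sketch of a forward pass for a single observation, written in plain Rust without rustml or BLAS. It rests on two assumptions that are inferred from the expected values in the example rather than stated in the documentation: every layer applies the logistic sigmoid, and the bias unit of a hidden layer is a constant 1.0 placed in front of that layer's activations. The names sigmoid, activate and forward are hypothetical and not part of the library.

```rust
/// Logistic sigmoid activation (assumed, see the note above).
fn sigmoid(z: f64) -> f64 {
    1.0 / (1.0 + (-z).exp())
}

/// Multiplies a weight matrix (one row per target unit) with an input vector
/// and applies the sigmoid to every component of the result.
fn activate(weights: &[Vec<f64>], input: &[f64]) -> Vec<f64> {
    weights
        .iter()
        .map(|row| {
            assert_eq!(row.len(), input.len(), "dimension mismatch");
            let z: f64 = row.iter().zip(input).map(|(w, x)| w * x).sum();
            sigmoid(z)
        })
        .collect()
}

/// Forward pass for a single observation through all parameter matrices.
fn forward(params: &[Vec<Vec<f64>>], observation: &[f64]) -> Vec<f64> {
    let mut activation = observation.to_vec();
    for (depth, weights) in params.iter().enumerate() {
        if depth > 0 {
            // Assumed convention: hidden layers get a bias unit with the
            // constant value 1.0 in front of their activations.
            activation.insert(0, 1.0);
        }
        activation = activate(weights, &activation);
    }
    activation
}
```

Calling forward with the matrices p1 and p2 from the example and the first observation 0.5, 1.2, 1.5 gives values that agree with the first row of the expected targets to about four decimal places, which supports the two assumptions.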

fn derivatives(&self, examples: &Matrix<f64>, targets: &Matrix<f64>) -> Vec<Matrix<f64>>

fn update_params(&mut self, deltas: &[Matrix<f64>])

Updates the parameters of the network.

Each matrix in deltas is added to the corresponding parameter matrix of the network, i.e. the first matrix is added to the parameters from the first layer to the second layer, the second matrix is added to the parameters from the second layer to the third layer, and so on.
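The element-wise update described above can be sketched in plain Rust as follows. The sketch uses nested vectors instead of the library's Matrix type, and the names add_deltas and gd_deltas are hypothetical. How the library's gradient descent actually combines derivatives and update_params is not documented here, so scaling the gradients by the negative learning rate is an assumption based on the standard gradient descent rule.

```rust
/// Adds each delta matrix element-wise to the parameter matrix at the
/// same index.
fn add_deltas(params: &mut [Vec<Vec<f64>>], deltas: &[Vec<Vec<f64>>]) {
    assert_eq!(params.len(), deltas.len(), "one delta matrix per parameter matrix");
    for (p, d) in params.iter_mut().zip(deltas) {
        assert_eq!(p.len(), d.len(), "row count mismatch");
        for (p_row, d_row) in p.iter_mut().zip(d) {
            assert_eq!(p_row.len(), d_row.len(), "column count mismatch");
            for (w, dw) in p_row.iter_mut().zip(d_row) {
                *w += *dw;
            }
        }
    }
}

/// Assumed gradient descent rule: scales every gradient by -alpha to obtain
/// the deltas of one update step.
fn gd_deltas(gradients: &[Vec<Vec<f64>>], alpha: f64) -> Vec<Vec<Vec<f64>>> {
    gradients
        .iter()
        .map(|g| {
            g.iter()
                .map(|row| row.iter().map(|v| -alpha * v).collect::<Vec<f64>>())
                .collect::<Vec<Vec<f64>>>()
        })
        .collect()
}
```

Under these assumptions, one gradient descent step would compute the gradients, pass them through gd_deltas with the learning rate, and hand the result to add_deltas.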

fn params(&self) -> Vec<Matrix<f64>>

Returns the parameters of the network.